What is a Load Balancer?

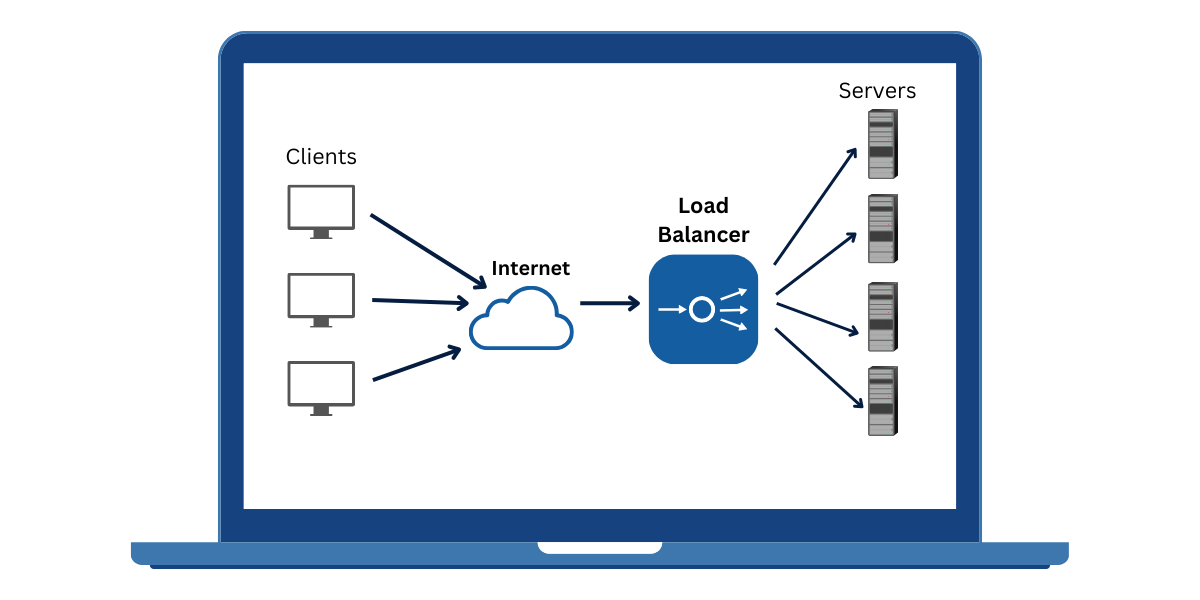

A load balancer is a device or software component that acts as a reverse proxy and distributes network or application traffic across a number of servers. It is used to increase application capacity (concurrent users) and reliability. Load balancers improve overall application performance by relieving servers of the work of managing and maintaining application and network sessions and of performing application-specific tasks.

Load balancers are a core component of modern IT infrastructure. In simple terms, a load balancer sits between clients and backend servers and distributes requests across multiple servers so that no single server is overwhelmed. This improves overall application performance and availability.

Key Capabilities Provided by Load Balancers

- Increased capacity: Ability to handle more simultaneous users and traffic across multiple servers

- Reliability: Failed servers can be automatically taken out of rotation

- Flexibility: New servers can be added without interrupting service

- Security: Help mitigate DDoS attacks by spreading attack traffic across servers or diverting it away from them

- Performance: Users get faster response times with reduced server load

- Scalability: System capacity can be easily expanded by adding servers

- Maintenance: Individual servers can be taken down for updates without downtime

Key Takeaways

- A load balancer sits between client devices and backend servers, distributing requests across multiple servers so that no single server is overwhelmed.

- Load balancers improve application performance by decreasing load on individual servers, managing traffic, and providing additional security.

- Common load-balancing algorithms include round robin, weighted round robin, least connections, and IP hashing.

- The major types of load balancers are L4/L7, hardware/software, and global/local. L4 operates at the transport layer, and L7 at the application layer.

- Benefits include increased capacity, reliability, flexibility, and security. Load balancers can also offload SSL encryption, caching, compression, TCP connection multiplexing, and more.

- Load balancers require hardware or virtual appliances, proper configuration, and maintenance. AWS, Azure, and GCP offer managed load-balancing services.

How Does a Load Balancer Work?

Conceptually, a load balancer acts as a single point of contact for client requests to a web-based service or application hosted on multiple servers. The load balancer sits in front of the servers and receives incoming network and application traffic.

Based on configured rules and policies, the load balancer evaluates incoming connections and requests and determines which backend server should handle each request. It then forwards the request to the selected server over a private network connection.

After getting the response from the backend server, the load balancer sends it back to the appropriate client. Clients only see and connect to the load balancer’s IP address. They have no visibility or direct connection to the servers behind them.

This architecture provides a single, unified access point with a consistent IP address for clients connecting to the service. Clients don’t need to keep track of multiple server IP addresses or worry about an individual server going down.

The load balancer also tracks the status of backend servers, pulling non-responsive nodes out of the pool to maintain overall system health and prevent failed servers from being sent traffic. This provides high availability for the application or service as a whole.
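
The health tracking described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular product implements it: the `BackendPool` class, the `max_failures` threshold, and the `tcp_probe` helper are all assumptions made for this example. A backend is treated as unhealthy after a number of consecutive failed checks and returns to rotation as soon as a check succeeds.

```python
import socket


def tcp_probe(backend, timeout=1.0):
    """Hypothetical probe: a backend is 'up' if a TCP connection succeeds."""
    host, port = backend
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


class BackendPool:
    """Tracks consecutive failed health checks per backend."""

    def __init__(self, backends, probe=tcp_probe, max_failures=3):
        self.probe = probe                # callable(backend) -> bool
        self.max_failures = max_failures  # assumed threshold, tune per service
        self.failures = {b: 0 for b in backends}

    def run_health_checks(self):
        """Probe every backend once and update its failure counter."""
        for backend in self.failures:
            if self.probe(backend):
                self.failures[backend] = 0   # a success puts it back in rotation
            else:
                self.failures[backend] += 1

    def healthy(self):
        """Backends that are still eligible to receive traffic."""
        return [b for b, n in self.failures.items() if n < self.max_failures]
```

In practice `run_health_checks()` would be called on a timer, and the selection algorithm would choose only from `healthy()`. Requiring several consecutive failures is a design choice that keeps a single dropped probe from removing a working server.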

Additional capabilities like SSL offloading, caching, compression, and TCP connection multiplexing allow load balancers to further improve performance and security compared to clients connecting directly to servers.

Load Balancing Algorithms

One of the key tasks of a load balancer is determining which backend server should handle each new request or connection. Several common load-balancing methods or algorithms are utilized:

- Round Robin: Requests are distributed sequentially across the group of servers, and each server is hit in a rotating order. This is a simple and very commonly used algorithm.

- Weighted Round Robin: Servers with higher weights receive more requests than others in proportion to their weights. This is useful when servers have different capabilities.

- Least Connections: The server with the fewest active connections receives the next request. Good for keeping similar servers evenly loaded.

- IP Hash: The client’s IP address is used to determine which server receives the request. This ensures that requests from a client consistently land on the same server.
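
To make the four algorithms above concrete, here is a minimal Python sketch of each. The class names and the `pick()` / `release()` methods are invented for this illustration and do not correspond to any specific load balancer's API. The weighted variant uses the simplest possible approach (repeating each server in the rotation according to its weight); production load balancers often interleave the picks more smoothly.

```python
import hashlib
import itertools


class RoundRobin:
    """Cycle through the servers in a fixed rotating order."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(list(servers))

    def pick(self, client_ip=None):
        return next(self._cycle)


class WeightedRoundRobin:
    """Naive weighting: each server appears `weight` times per rotation."""

    def __init__(self, weights):  # weights: {server: positive int}
        expanded = [s for s, w in weights.items() for _ in range(w)]
        self._cycle = itertools.cycle(expanded)

    def pick(self, client_ip=None):
        return next(self._cycle)


class LeastConnections:
    """Pick the server that currently has the fewest active connections."""

    def __init__(self, servers):
        self.active = {s: 0 for s in servers}

    def pick(self, client_ip=None):
        server = min(self.active, key=self.active.get)
        self.active[server] += 1  # the caller must release() when done
        return server

    def release(self, server):
        self.active[server] -= 1


class IPHash:
    """Map a client IP to a server with a stable hash of the address."""

    def __init__(self, servers):
        self.servers = list(servers)

    def pick(self, client_ip):
        digest = hashlib.sha256(client_ip.encode()).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.servers)
        return self.servers[index]
```

For example, `RoundRobin(["a", "b", "c"])` returns `a`, `b`, `c`, `a` on four successive calls to `pick()`. The IP hash sketch uses SHA-256 rather than Python's built-in `hash()`, because the built-in is randomized per process for strings and would not give the same mapping after a restart. Note that with a simple modulo, adding or removing a server remaps most clients.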

Many load balancers also support custom load balancing algorithms that implement additional logic, such as routing based on measured server load or on proximity to the client. Random selection is sometimes used as well.

The load balancing method can have a significant impact on overall application performance and behavior, so it should be configured carefully with testing and monitoring.

Load Balancer Types

There are several ways we can categorize load balancers based on different architectural and configuration aspects:

- Layer 4 vs Layer 7

- Hardware vs Software

- Global vs. Local

Layer 4 vs Layer 7

- Layer 4: Operates at the transport layer of the OSI model (TCP/UDP). Layer 4 or L4 load balancers are fast, efficient, and add very little overhead, but they can only route connections based on IP/port information. They are also referred to as transport layer load balancers.

- Layer 7: Operates at the application layer (HTTP/HTTPS/WebSockets). Layer 7 or L7 load balancers allow routing decisions based on attributes like HTTP headers, URLs, and cookies. This enables more advanced load balancing functionality tuned for specific apps. The downside is added latency and processing overhead. They are also referred to as application-layer load balancers.
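
The difference between the two layers comes down to what information is available when the routing decision is made. The following Python sketch illustrates this under simplified assumptions: the `Request` type, the pool names, and the `/api/` and `static.` rules are hypothetical and chosen only for this example.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Request:
    """Simplified view of one incoming connection or request."""
    src_ip: str
    src_port: int
    dst_port: int
    path: str = "/"                               # visible at Layer 7 only
    headers: dict = field(default_factory=dict)   # visible at Layer 7 only


def l4_route(req, backends):
    """Layer 4: decide using only addresses and ports."""
    key = f"{req.src_ip}:{req.src_port}:{req.dst_port}".encode()
    index = int.from_bytes(hashlib.sha256(key).digest()[:8], "big") % len(backends)
    return backends[index]


def l7_route(req, pools):
    """Layer 7: the parsed HTTP request can drive the decision."""
    if req.path.startswith("/api/"):
        return pools["api"]
    if req.headers.get("Host", "").startswith("static."):
        return pools["static"]
    return pools["web"]
```

Here `l4_route` never looks at `path` or `headers`, which is why a Layer 4 balancer can forward traffic without parsing it. `l7_route` has to read the request first, which is where the extra latency and processing overhead come from.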

Hardware vs Software

- Hardware: Dedicated hardware appliances designed specifically for high-performance load balancing. Offer excellent throughput and processing power with advanced clustering options. However, they can be expensive and add hardware sprawl.

- Software: Load balancing software running on commodity servers. Provides flexibility and can leverage unused resources on existing servers. Performance and scalability may not match dedicated hardware.

Global vs. Local

- Global: Distribute traffic across multiple data centers or geographic regions, typically by directing each client to a local load balancer at the nearest or healthiest site.

- Local: Distribute traffic across the servers within a single data center or cluster, sitting directly in front of the backend servers.

Load Balancer Features and Functions

In addition to basic load balancing of traffic across multiple servers, modern load balancers provide a robust set of features and capabilities:

- SSL/TLS Offloading: Decrypt inbound HTTPS traffic and pass unencrypted HTTP to backend servers, reducing computational load.

- Session Persistence: Route subsequent requests from a client to the same backend server, maintaining the application state. Enable with cookies, IP hashing, etc.

- Health Monitoring: Constantly check the health status of backend servers to detect failures and avoid sending traffic to downed nodes.

- Web Application Firewall: Protect against exploits like SQL injections, cross-site scripting, DDoS, etc.

- Caching: Store common responses and assets to return immediately instead of hitting backend servers.

- Compression: Compress outbound responses to reduce bandwidth utilization.

- TCP Multiplexing: Reuse backend connections instead of opening a separate connection for every request to reduce overhead.

- Rate Limiting: Control the rate of incoming/outgoing traffic to prevent individual connections or clients from utilizing excessive resources.

- Rewriting/Manipulation: Modify request/response headers, URLs, and payloads to handle versioning, restrict access, etc.
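
As an illustration of the rate limiting feature listed above, here is a minimal token bucket in Python. The token bucket is one common technique and is used here as an assumption; the source does not say which method any given load balancer uses. The `TokenBucket` class and its parameters are hypothetical names for this sketch.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate                # tokens added per second
        self.capacity = capacity        # maximum burst size
        self.clock = clock              # injectable so the logic can be tested
        self.tokens = float(capacity)   # start with a full bucket
        self.last = clock()

    def allow(self):
        """Return True if one request may pass now, consuming a token."""
        now = self.clock()
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A load balancer would typically keep one bucket per client IP or API key and reject or delay requests for which `allow()` returns `False`.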

Load Balancer Use Cases

Load balancers provide crucial functionality for scaling and delivering high-performance, available applications and services in a wide variety of use cases:

- Websites and Web Apps: Distribute web traffic across multiple web/app servers, such as Apache and Tomcat. This enables horizontal scaling and zero downtime maintenance.

- Microservices: Route requests for each microservice to available service instances spread across the infrastructure.

- Cloud Computing: Public cloud computing platforms like AWS, Azure, and GCP leverage load balancers to distribute traffic for their critical services and customer applications.

- Containers: Container orchestrators like Kubernetes utilize load balancers to deliver traffic to containers and provide full-stack load balancing for container-based apps.

- APIs: APIs act as entry points for web and mobile apps to access backend services. Load balancers handle large volumes of API requests and scale API gateways.

- Mobile Apps: Backends for mobile apps use load balancing to spread user sessions across multiple servers and geo-distributed infrastructure.

Load Balancer Architectures

There are several common load balancer architectures and topologies utilized:

- Single Load Balancer: A single load balancer distributes traffic across multiple backend servers. Provides basic load balancing with a single point of failure.

- Active-Passive: Two load balancers, one active (handling traffic) and the other passive (on standby). The passive load balancer takes over if the active one goes down. Removes the single point of failure.

- Active-Active: Multiple active load balancers handling traffic together and spreading the load between them. Increases capacity and availability.

- Two-Tier: Combination of multiple first-tier global load balancers and second-tier local load balancers closer to backend servers. Improves scalability.

- Multi-Site: A single global load balancer fronts multiple local load balancers, one at each physical site/data center. Enables geography-based load distribution.

- Cloud Hybrid: Load balancers span public cloud and on-premise environments, routing traffic between them.

Each design involves tradeoffs in terms of complexity, cost, and performance. The optimal load balancer architecture depends on the specific application, traffic volume, and infrastructure.
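
The active-passive arrangement described above can be sketched as a heartbeat check. This is a simplified illustration under stated assumptions: the `ActivePassivePair` class, the `heartbeat_timeout` value, and the node names are hypothetical, and real deployments usually rely on an established failover protocol plus a floating IP address rather than hand-written logic.

```python
class ActivePassivePair:
    """Promote the standby when the active node stops sending heartbeats."""

    def __init__(self, active, passive, heartbeat_timeout=3.0):
        self.active = active
        self.passive = passive
        self.heartbeat_timeout = heartbeat_timeout  # seconds, assumed value
        self.last_heartbeat = 0.0

    def heartbeat(self, now):
        """Record that the active node reported in at time `now`."""
        self.last_heartbeat = now

    def check(self, now):
        """Fail over if the heartbeat is too old, then return the active node."""
        if now - self.last_heartbeat > self.heartbeat_timeout:
            self.active, self.passive = self.passive, self.active
            self.last_heartbeat = now  # give the new active node a fresh window
        return self.active
```

With a 3-second timeout and a last heartbeat at `t=10`, `check(now=12)` still returns the original active node, while `check(now=14)` swaps the roles and returns the former standby.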

How to Deploy Load Balancers

There are several options for deploying load balancers:

- Hardware Appliances: Purchase dedicated load balancer appliances from vendors like F5, Citrix, A10, etc. These appliances offer advanced features and performance tailored for load balancing.

- Software Load Balancers: Install open-source (HAProxy, NGINX, etc.) or commercial software LB products on commodity servers. Flexible and cost-effective.

- Cloud Managed Services: Use fully managed, on-demand load balancing services offered by public clouds, such as AWS Elastic Load Balancing, Azure Load Balancer, and Google Cloud Load Balancing. These services scale automatically and leave no load balancer servers for you to manage.

- Container Native: Kubernetes, Docker Swarm, and other container orchestrators include built-in load balancers designed specifically for container environments and microservices.

Key factors to consider when selecting a load-balancing solution include traffic levels, use case complexity, high availability requirements, hardware vs. software preferences, and integration with existing infrastructure and tools.

Load Balancer Maintenance

Properly maintaining load balancers is essential for optimal performance and availability. Key maintenance activities include:

- Monitoring health status, network traffic, latency, and other metrics

- Applying security patches and firmware/software updates

- Adding and removing backend servers as capacity changes

- Modifying load balancer configurations for new app features/behavior

- Testing failover to validate high availability

- Tuning load balancing algorithms and connection settings

- Upgrading to newer hardware or software load balancer versions

- Backing up and restoring load balancer configurations

- Scaling load balancers to handle increased traffic demands

- Troubleshooting issues and outages

Robust maintenance helps ensure load balancers operate smoothly even as application requirements evolve.

What are the Challenges of Load Balancers?

While extremely useful, load balancers also come with certain challenges IT teams should be aware of:

- Single Point of Failure: A load balancer failure can disrupt access to the whole application, requiring high-availability load balancer configurations.

- Overloading: A load balancer can still become a bottleneck if it lacks the processing capacity to handle peak traffic demands. This requires planning and testing.

- Increased Complexity: Load balancers add more components and connections to monitor and manage.

- SSL Overhead: Decrypting and re-encrypting HTTPS traffic imposes a significant CPU load. SSL offloading can optimize this.

- Sticky Sessions: Solutions like cookies are required to handle user sessions that must be pinned to the same backend server, which adds complexity.

- Logs and Metrics: Debugging and monitoring can be more difficult when traffic goes through load balancers instead of directly to servers.

- Testing and Maintenance: Backend upgrades and app changes must be tested through a load balancer to catch issues.
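
The cookie-based approach to sticky sessions mentioned above can be sketched as follows. The cookie name `LB_BACKEND` and the `pick_with_cookie` function are hypothetical, chosen only for this illustration; real load balancers use their own cookie names and usually sign or obfuscate the value.

```python
def pick_with_cookie(cookies, backends, fallback):
    """Return (backend, cookie_to_set) for one request.

    cookies:  dict of cookies sent by the client
    backends: list of backends currently in rotation
    fallback: callable that picks a backend when no valid pin exists
    """
    pinned = cookies.get("LB_BACKEND")  # hypothetical cookie name
    if pinned in backends:
        return pinned, None             # honor the existing pin
    chosen = fallback()                 # e.g. round robin or least connections
    return chosen, ("LB_BACKEND", chosen)
```

The check `pinned in backends` covers part of the added complexity: if the pinned server has been removed from rotation, the client is quietly re-pinned to another server, and any session state held only on the old server is lost.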

Final Thoughts

Load balancers are a foundational technology for scaling web applications and providing highly available services. They improve performance by reducing server load, enable maintenance without downtime, and simplify capacity expansion.

Various algorithms, architectures, and deployment options provide flexibility to handle diverse use cases and traffic levels. Combined with health checks and failover capabilities, load balancers play a critical role in delivering robust and resilient applications.

Organizations should adopt load balancing strategically, whether through cloud services, software, or hardware appliances, and tailor it to their specific application requirements and infrastructure. Given the ever-growing demands of internet-driven businesses, load balancing delivers an essential layer of infrastructure to smooth traffic flow and keep services running 24/7.

Frequently Asked Questions (FAQs) on Load Balancing:

What are the key benefits of using a load balancer?

Some of the top benefits load balancers provide include:

- Increased capacity to handle more traffic and simultaneous users

- Improved reliability through failover and removing single points of failure

- Flexibility to easily add/remove servers without interrupting service

- Enhanced security against attacks like DDoS

- Faster performance by reducing the load on individual servers

- Easier scalability by simply adding additional backend servers

- Smooth maintenance/upgrades by taking servers offline without downtime

What are the differences between Layer 4 and Layer 7 load balancers?

Layer 4 load balancers work at the transport layer and distribute connections based only on IP addresses and TCP ports. Layer 7 balancers work at the application layer, allowing routing decisions based on HTTP headers, cookies, URLs, etc., for more advanced load balancing.

Layer 4 is faster and simpler, but Layer 7 enables better application-level control.

When should I use hardware load balancers vs a software solution?

Hardware load balancer appliances provide very high throughput and ultra-low latency processing of network traffic. However, they can be expensive. Software load balancers offer more flexibility and leverage commodity server hardware.

For very high-traffic sites handling millions of concurrent connections, dedicated hardware load balancer appliances are likely the better choice. Software load balancers are better suited to smaller-scale applications.

How does load balancing help with scaling web applications?

Load balancers make it possible to add backend servers seamlessly to increase the capacity of web apps. Once a new server joins the pool, traffic is spread to it automatically, with no changes needed on the client side. Load balancers provide a single access point, hiding infrastructure changes from clients. This horizontal scaling makes it easy to expand capacity.

How do I choose the right load-balancing algorithm?

Round-robin balancing is simple and works well for many use cases with similar servers. For servers with different capacities, weighted round robin is better. Least connections is a good choice when server loads vary. Use IP-based hashing for requests that must hit the same backend repeatedly.

There is no single best method for all cases. Testing different algorithms under load is important to tune for your specific app.

What are common load balancer high availability configurations?

Active-passive pairs with automatic failover are very common for load balancer high availability. Active-active load balancers that share traffic concurrently also increase availability. Multiple layers, like global + local load balancers, improve scalability and redundancy.

How can I monitor the health status of load-balanced servers?

Load balancers provide health-checking functionality to constantly monitor backend servers using pings, connection attempts, HTTP requests, etc. Unhealthy servers that do not respond to checks are automatically pulled out of rotation until they recover. This keeps requests away from failed servers and maintains availability.

Priya Mervana

Verified Web Security Experts

Priya Mervana is working at SSLInsights.com as a web security expert with over 10 years of experience writing about encryption, SSL certificates, and online privacy. She aims to make complex security topics easily understandable for everyday internet users.
